How Are Audio Datasets Changing AI and Deep Learning Models?

We are gradually moving towards a world where machine learning systems can understand what we say, with uses ranging from virtual assistants to security authentication. Many of us use virtual assistants like Alexa, Siri, Google Assistant and Cortana in our daily lives. They can turn on our home’s lights, search for information on the internet, and even start a video conference. Many people are unaware that these technologies rely on speech recognition and natural language processing, both of which require AI training datasets.

What is ASR?

Automatic Speech Recognition, or ASR for short, is the technology that enables humans to use their voices to communicate with a computer interface in a manner that, in its most sophisticated variations, resembles normal human conversation. The most advanced current ASR systems combine speech recognition with Natural Language Processing, or NLP for short.

This variant of ASR comes closest to allowing real conversations between people and machine intelligence. While it still has a long way to go before reaching its full potential, we’re already seeing some remarkable results in the form of intelligent smartphone interfaces like the Siri assistant on the iPhone and other systems used in business and advanced technology contexts.

How do ASR models work?

The following is the basic sequence of events by which any Automatic Speech Recognition software, regardless of sophistication, picks up and breaks down your words for analysis and response:

- You communicate with the software via an audio feed.

- The device you are speaking to generates a WAV file of your words.

- Background noise is removed from the WAV file, and the volume is normalised.

- The resulting filtered waveform is then broken down into phonemes.

(Phonemes are the fundamental sound units of language and words. English has about 44 of them, including sounds like “wh”, “th”, “k”, and “t”.)

- Each phoneme is like a link in a chain. The ASR software uses statistical probability analysis to deduce whole words and, from there, complete sentences by analysing the phonemes in sequence, beginning with the first one.

- Your ASR system can now respond to you in a meaningful way because it has “understood” your words.
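To make two of the steps above more concrete, here is a minimal Python sketch. It assumes the audio has already been decoded into floating-point samples in the range [-1, 1], and it uses a crude amplitude noise gate as a stand-in for real noise removal. The pronunciation lexicon, phoneme symbols and word probabilities are hypothetical toy values; production systems learn these from data and use far richer acoustic and language models.

```python
import math

def noise_gate(samples, threshold=0.02):
    """Crude noise removal: silence any sample quieter than `threshold`."""
    return [s if abs(s) >= threshold else 0.0 for s in samples]

def peak_normalize(samples, target_peak=0.9):
    """Scale samples so the loudest one reaches `target_peak`."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # pure silence: nothing to scale
    gain = target_peak / peak
    return [s * gain for s in samples]

# Hypothetical pronunciation lexicon (word -> phoneme sequence) and
# hypothetical word probabilities; real systems learn both from data.
LEXICON = {
    "turn": ("t", "er", "n"),
    "tern": ("t", "er", "n"),
    "on": ("aa", "n"),
    "the": ("dh", "ah"),
    "light": ("l", "ay", "t"),
    "lights": ("l", "ay", "t", "s"),
}
WORD_PROBS = {"turn": 0.2, "tern": 0.01, "on": 0.3, "the": 0.4,
              "light": 0.05, "lights": 0.05}

def decode_phonemes(phonemes, lexicon=LEXICON, word_probs=WORD_PROBS):
    """Return the most probable word sequence that spells out `phonemes`.

    Dynamic programming over positions: best[i] holds the highest
    log-probability parse of phonemes[:i], built left to right starting
    from the first phoneme. Returns None if no parse covers every phoneme.
    """
    phonemes = tuple(phonemes)
    n = len(phonemes)
    best = [(-math.inf, [])] * (n + 1)
    best[0] = (0.0, [])
    for i in range(n):
        score, words = best[i]
        if score == -math.inf:
            continue  # no word sequence ends exactly at position i
        for word, pron in lexicon.items():
            j = i + len(pron)
            if phonemes[i:j] == pron:
                candidate = score + math.log(word_probs[word])
                if candidate > best[j][0]:
                    best[j] = (candidate, words + [word])
    return best[n][1] if best[n][0] > -math.inf else None

if __name__ == "__main__":
    # Remove noise first, then normalise the volume, as in the steps above.
    clean = peak_normalize(noise_gate([0.01, -0.5, 0.25, 0.005]))
    print(clean)
    words = decode_phonemes(["t", "er", "n", "aa", "n", "dh", "ah",
                             "l", "ay", "t", "s"])
    print(words)  # ['turn', 'on', 'the', 'lights']
```

Both “turn” and the hypothetical “tern” match the first three phonemes; the decoder keeps “turn” because its assumed probability is higher, which is the kind of statistical choice the chain-of-phonemes step describes.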

Speech Collection for training ASR models

It is critical to collect large speech and audio datasets to get the most out of your ASR models. The goal of speech collection is to aggregate a sample set large enough to train ASR models. Later, speaker recognition methods can compare these speech datasets against the speech of unknown speakers. For an ASR system to work properly, speech must be collected for all target demographics, languages, dialects, and accents.

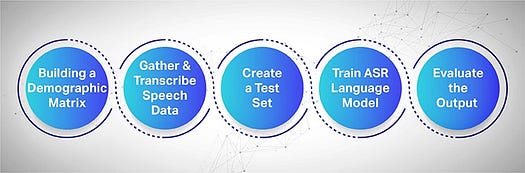

Artificial intelligence can only be as smart as the data it is fed. To train an ASR model effectively, you must collect large amounts of speech or audio data. We’ve outlined the steps for gathering speech data to train your machine learning models effectively:

- Construct a demographic matrix: Consider things like location, language, gender, age, and accent. Take note of the various environments (a busy street, an open office, or a waiting room) and of how people use devices like smartphones, desktops or headsets.

- Gather and transcribe audio data: To train your model, collect speech samples from real people. This step requires human transcriptionists to transcribe long and short utterances and to note key details that match your demographic matrix. Humans are still needed to create properly labelled speech and audio datasets that serve as a foundation for future applications and development.

- Create a separate test set: Pair each transcript with its corresponding audio and segment the pairs so that each segment holds one statement. Then randomly extract 20% of the segmented pairs to form a test set.

- Develop your language model: Create text variations that were not previously recorded. For example, if for order cancellations you only recorded the statement “I want to cancel my order”, you can add “Can I cancel my subscription?” and “I want to unsubscribe” in this step. You can also include relevant expressions and jargon.

- Iterate and measure: Benchmark performance by evaluating the output of your ASR, measuring how well the trained model predicts the test set. Feed the results back into a training loop to close any gaps and produce the desired results.
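The data-preparation steps above can be sketched in Python as follows. This is an illustration under stated assumptions, not a production pipeline: the attribute values in `DEMOGRAPHICS` are hypothetical, each pair is assumed to be an (audio path, transcript) tuple, and word error rate (WER), a standard ASR benchmark metric, stands in for the unspecified measurement in the “Iterate and measure” step.

```python
import itertools
import random

# Hypothetical demographic attributes; a real matrix follows your target users.
DEMOGRAPHICS = {
    "language": ["en-US", "en-IN"],
    "age_band": ["18-34", "35-54", "55+"],
    "environment": ["busy street", "open office", "waiting room"],
    "device": ["smartphone", "desktop", "headset"],
}

def demographic_matrix(attributes):
    """Every combination of attribute values: one recording cell per row."""
    keys = list(attributes)
    combos = itertools.product(*(attributes[k] for k in keys))
    return [dict(zip(keys, combo)) for combo in combos]

def split_test_set(pairs, test_fraction=0.2, seed=0):
    """Shuffle segmented pairs and hold out `test_fraction` as a test set."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)  # fixed seed: reproducible split
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)

def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref, hyp = reference.split(), hypothesis.split()
    # d[i][j] = word-level edit distance between ref[:i] and hyp[:j]
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i
    for j in range(len(hyp) + 1):
        d[0][j] = j
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # substitution or match
    return d[len(ref)][len(hyp)] / max(len(ref), 1)

if __name__ == "__main__":
    cells = demographic_matrix(DEMOGRAPHICS)
    print(len(cells))  # 54
    pairs = [(f"clip_{i}.wav", f"utterance {i}") for i in range(100)]
    train, test = split_test_set(pairs)
    print(len(train), len(test))  # 80 20
    wer = word_error_rate("i want to cancel my order",
                          "i want to cancel the order")
    print(round(wer, 3))  # 0.167
```

In the example, the hypothesis differs from the reference by one substituted word out of six, giving a WER of about 0.17. Fixing the shuffle seed keeps the held-out 20% identical across runs, so scores from successive iterations stay comparable.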

Applications of speech recognition

Aside from virtual assistants, speech recognition systems are used in a variety of industries:

- Travel: According to Automotive World, 90% of new vehicles sold will be voice-activated by 2028. Applications such as Apple CarPlay and Google’s Android Auto use voice data to activate navigation systems, send messages, and switch music playlists in a car’s entertainment system. BMW collaborated with Nuance (now part of Microsoft) to power the BMW Intelligent Personal Assistant, which was first introduced in the BMW 3 Series. The AI-powered digital companion lets drivers operate their car and access information, such as the car manual, simply by speaking.

- Food: McDonald’s and Wendy’s are improving their customer service with automatic speech recognition. An AI platform transcribes customers’ spoken orders and passes them to the cooks for preparation. Integrating speech recognition results in faster, more frictionless interactions and lower labour costs.

- Entertainment: YouTube’s AI-powered audio features now include live auto-captions, so creators can live stream with captions that appear automatically at the bottom of the screen. This ASR feature is set to become available in more languages, making streams more inclusive and accessible.

How can GTS help you?

To understand natural language, algorithms must be trained on large amounts of written or spoken data annotated for parts of speech, meaning, and sentiment. Here’s what Global Technology Solutions brings to the table: expertise and experience in collecting audio, text, video, and image datasets, and in enhancing text and speech data for machine learning.
